I recently ran a lot of tests to understand how AI can actually improve people’s lives. Beyond being a “stochastic parrot” that can be used for almost anything language-related, I wanted to explore its boundaries—if there are any.

So I started diving into the world of Agentic AI, as in my last post about n8n.io, where I ran some initial experiments. This time, I wanted to take a more serious approach and try something completely different. Since I still consider myself (at least when asked) pretty good at software development, I decided to explore AI-assisted software development.

A quick summary up front: What I’ve seen and experienced simply blew me away.

Vibe-Coding? Not quite.

I thought I was doing “Vibe-Coding” – just giving the AI a rough idea and letting it handle the rest, without touching a single line of code myself. But I wasn’t convinced, and I probably didn’t do it right. Thanks to a former colleague, I became intrigued by cline.bot for AI-assisted coding. That’s how I ended up doing Context Engineering – or at least what I consider context engineering.

Management Summary

Context engineering is like working with a bloody fast beginner – someone who helps you get through the engineering part faster than anything else. But remember: AI is still just a “stochastic parrot”. It repeats what it learned from countless coding snippets online (or wherever they come from). So you’d better give it the most precise instructions possible.

Can it solve your highly specialized task with a fancy algorithm? Probably not. But for common problems and applications that are just new variations of existing solutions, AI is incredibly useful.

What Is Context Engineering?

Since I wouldn’t be doing AI justice if I didn’t consult my favorite assistant (mistral.ai), I asked Le Chat for a definition. Here’s the summary:

Context engineering involves shaping the environment, background, or surrounding information to improve the relevance, accuracy, and effectiveness of communication or computational processes. In AI, it refers to structuring input prompts, background knowledge, or conversational history to guide models (like LLMs) toward more useful, accurate, or contextually appropriate outputs.

The Setup

I used Visual Studio Code with two key plugins:

- cline.bot as the main AI coding agent.

- GitHub Copilot for additional challenges.

With cline, the choice of model significantly impacts code quality—who would’ve thought? After some experimentation, I found the best setup for me:

- Anthropic’s Claude-Sonnet-4 for the plan phase (higher quality, but more expensive).

- A cheaper model (like Google Gemini) for the act phase (where the actual coding happens).

Wait, plan and act?

Cline lets you split your workflow into two parts:

- Plan: High-level reasoning (using Claude).

- Act: Implementation (using Gemini).

(More on costs later—spoiler: It adds up fast!)
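To make the plan/act split concrete, here is a minimal sketch of the idea in Python. It is not how cline stores its settings (there you pick the models in the extension's UI); the `Phase` enum, the `ROUTING` table, and the model labels are names I made up for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    PLAN = "plan"  # high-level reasoning
    ACT = "act"    # implementation


@dataclass(frozen=True)
class ModelChoice:
    provider: str
    model: str


# Hypothetical routing table. The model labels are placeholders for
# illustration, not exact API identifiers.
ROUTING = {
    Phase.PLAN: ModelChoice(provider="anthropic", model="claude-sonnet-4"),
    Phase.ACT: ModelChoice(provider="google", model="gemini"),
}


def model_for(phase: Phase) -> ModelChoice:
    """Return the model configured for the given workflow phase."""
    return ROUTING[phase]
```

Calling `model_for(Phase.PLAN)` returns the more expensive reasoning model, while `model_for(Phase.ACT)` returns the cheaper one that does the actual coding.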

How to Start

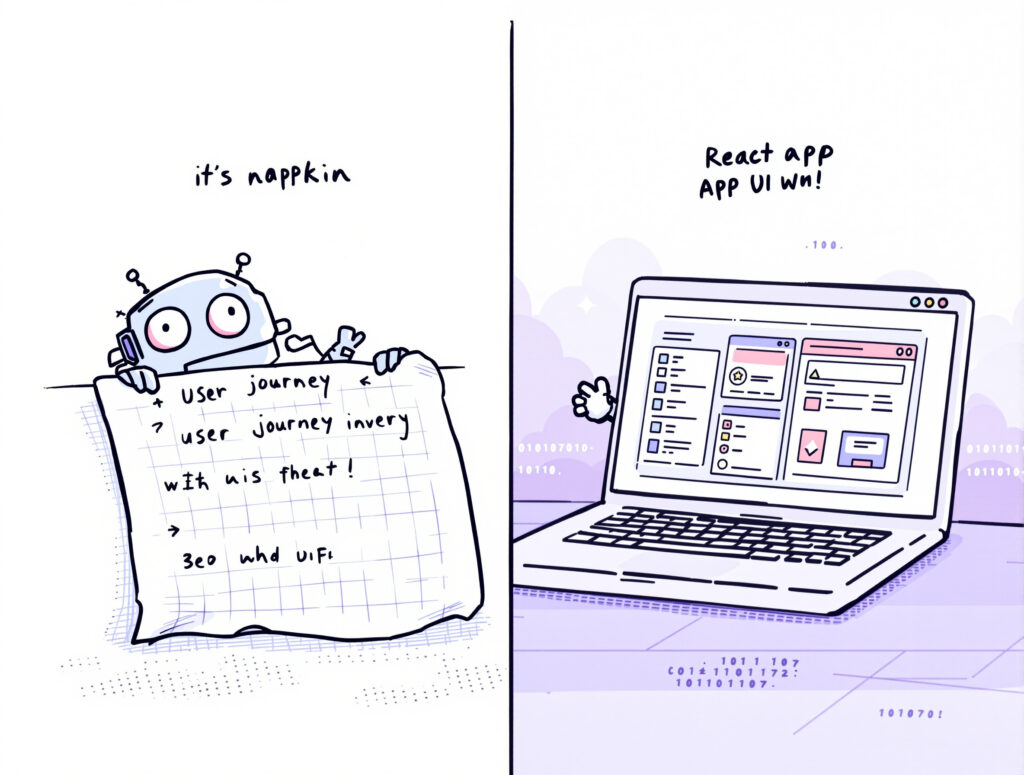

I began with a simple idea: Let’s challenge AI-assisted coding (though I didn’t realize I was doing context engineering yet).

- I started a chat and asked for a user journey for an application.

- After sketching it out, I told the AI:

“Draw me some scribble screens to go with it.”

Honestly, I expected nothing usable. But after pasting the user journey and rough screenshots into a folder and prompting:

“Take a look at the user-journey folder—it contains a user-journey.md file and some screen scribbles. Create a React app for it.”

The result? A working prototype in 10 minutes—better structured than my own sketches.

Where to Go From There?

Once the prototype was ready, I pushed further:

- “Did you document the code already?” → 2 minutes later, I had clear, readable documentation.

- Next, I added features and tried TDD (Test-Driven Development). It worked well, but prompting for TDD every time got tedious.

Solution: Rules.

You can define rules for cline (e.g., “Always document new code” or “Write unit tests for new features”). I ended up with 7 rule files for tests, architecture, docs, etc.
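A rule file is nothing more than a short text file with instructions. The sketch below writes two of them to disk. The file names and rule texts are illustrative, not my actual seven files, and the `.clinerules` folder name is an assumption you should check against the cline documentation for your version.

```python
from pathlib import Path

# Hypothetical rule texts, one Markdown file per topic.
RULES = {
    "01-documentation.md": "# Documentation\n\n- Always document new code.\n",
    "02-testing.md": (
        "# Testing\n\n"
        "- Write unit tests for new features.\n"
        "- Write the failing test before the implementation (TDD).\n"
    ),
}


def write_rules(project_root: Path, folder: str = ".clinerules") -> list[Path]:
    """Write each rule to its own file and return the created paths."""
    rules_dir = project_root / folder  # folder name is an assumption
    rules_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for name, text in sorted(RULES.items()):
        path = rules_dir / name
        path.write_text(text, encoding="utf-8")
        written.append(path)
    return written
```

Running `write_rules(Path("."))` in a project root creates the folder and both files. The point of splitting rules by topic is that each file stays short enough to review and change on its own.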

My Context Window Is Exploding!

Developing means adding a lot of context—which clogs the conversation. Cline’s solution? A memory bank.

- A collection of files (README, changelog, etc.) that provide persistent context.

- Keeps the conversation focused and reduces repetitive explanations.
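The idea behind a memory bank can be sketched in a few lines: read a fixed set of files and put them in front of the conversation, within a size budget. This is my own illustration of the concept, not cline's implementation; `build_context`, its parameters, and the character budget are hypothetical.

```python
from pathlib import Path


def build_context(bank_dir: Path, file_names: list[str], max_chars: int = 8000) -> str:
    """Concatenate memory-bank files into one preamble, stopping at a size budget."""
    parts = []
    used = 0
    for name in file_names:
        path = bank_dir / name
        if not path.is_file():
            continue  # a missing file is skipped rather than treated as an error
        text = path.read_text(encoding="utf-8").strip()
        section = f"## {name}\n{text}\n\n"
        if used + len(section) > max_chars:
            break  # stop before the preamble exceeds the budget
        parts.append(section)
        used += len(section)
    return "".join(parts)
```

A call like `build_context(Path("memory-bank"), ["README.md", "CHANGELOG.md"])` returns one string with a heading per file. The order of `file_names` matters: whatever comes first is most likely to fit into the budget.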

The Rules of Engagement

AI agents are supporting actors, not the main act. Think of them as highly capable junior developers:

- They handle the heavy lifting but need guidance.

- They sometimes cut corners—you must review their work.

- They free you up for higher-level tasks (e.g., talking to customers while the AI codes).

The gain? You can manage multiple repos at once, delegating implementation while you focus on strategy.

What’s Next?

The journey into the world of Agentic AI has only just begun. Here are some questions and topics I want to explore next—and that might interest you too:

- Model Behavior Comparison:

How do Claude, Gemini, Grok, or GPT-5 differ in plan and act phases?

(Example: Is Claude better for architectural decisions while Gemini prototypes faster?)

- Agent vs. Agent:

When is cline.bot worth it, when should you use GitHub Copilot, and when should you try experimental tools like AutoGPT or Devin?

(Spoiler: Copilot excels at code completion, cline.bot at complex workflows.)

- Reusable Workflows:

Are there standard processes for Context Engineering (e.g., predefined rules for TDD, documentation, or API integration)?

(Imagine: An “Agentic AI Cookbook” for typical development tasks.)

- Agent Collaboration:

Can multiple AI agents work on a project in parallel, and how do you coordinate them?

(One agent for frontend, one for backend, one for tests: who orchestrates it all?)

- Limits of Autonomy:

Where does Agentic AI still fail?

(Highly specialized algorithms? Creative design? Or just vague requirements?)

- Costs & Efficiency:

How can you keep the resource hunger (token costs, compute time) under control?

(Tips: Optimize memory banks, use cheaper models for routine tasks.)
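On the cost question, a back-of-the-envelope estimate already helps. Providers typically bill per million input and output tokens, so the arithmetic is simple. In the sketch below, the function name and all prices and token counts are made up for illustration; plug in the current numbers from your provider's price list.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    price_in_per_million: float,
    price_out_per_million: float,
) -> float:
    """Cost of one request: tokens times the price per million tokens."""
    return (
        input_tokens * price_in_per_million
        + output_tokens * price_out_per_million
    ) / 1_000_000


# Hypothetical numbers: an expensive model for a short plan phase,
# a cheaper model for a long act phase.
plan_cost = estimate_cost(50_000, 2_000, price_in_per_million=3.0, price_out_per_million=15.0)
act_cost = estimate_cost(200_000, 20_000, price_in_per_million=0.5, price_out_per_million=2.0)
total_cost = plan_cost + act_cost
```

With these made-up prices, the act phase processes four times as many input tokens as the plan phase and still costs less. That is the whole argument for using a cheaper model for routine implementation work.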

The thread is spun—now it’s time to experiment.

(And yes, I’ll report back on what comes of it. Stay tuned!)

Like all my recent posts, this article was drafted with the help of AI (specifically, Le Chat by Mistral AI) and then proofread by the same. The ideas, experiments, and opinions are entirely my own—but the AI helped sharpen the language, structure, and (hopefully) readability. Consider it a collaboration between human curiosity and machine precision.
